
You don’t need better AI. You need better systems around it.

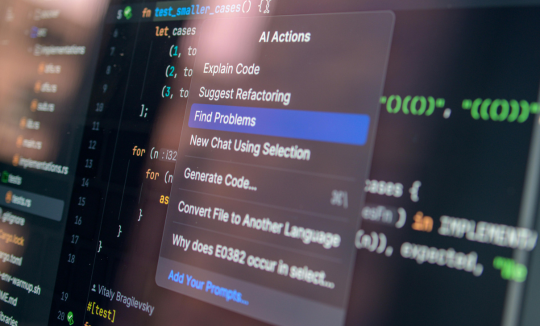

Right now, everyone is talking about AI agents. Automated workflows, smart assistants, autonomous decision-making. The story is simple and appealing: connect a few tools, spin up an agent, and suddenly your business starts running on autopilot.

Reality tends to be much less glamorous.

Most AI initiatives don’t stall because the technology isn’t good enough. They stall because they run straight into everyday business chaos. Fragmented data, half-defined processes, unclear ownership, missing guardrails. In that environment, even the most advanced agent quickly loses its magic and turns into something far less impressive: just another chatbot with big ambitions.

A lot of the conversation around AI today focuses on trust. Can we trust the outputs? Are the models accurate? Are the predictions reliable?

These are important questions. But that’s not where trust actually begins.

In practice, trust starts much earlier. It starts with where your data comes from, who owns it, how often it’s updated, and what happens when something unexpected shows up. Real trust isn’t about getting a good answer once in a while. It’s about predictability. Teams need to understand how decisions are made, when humans step in, how edge cases are handled, and how outcomes are monitored over time. Without this operational clarity, AI systems feel opaque and fragile, even if they perform well in demos.
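As a rough illustration, the questions about where data comes from, who owns it, and how fresh it is can be written down as an explicit check. The sketch below is only that: the `DataSource` fields, the `is_trustworthy` helper, and the example values are hypothetical, not taken from any particular tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DataSource:
    """Hypothetical record describing one input an agent depends on."""
    name: str
    owner: str              # team or person accountable for the data
    last_updated: datetime  # when the data was last refreshed
    max_age: timedelta      # how stale the data may be before we stop trusting it


def is_trustworthy(source: DataSource, now: datetime) -> bool:
    """A source passes only if it has an owner and is fresh enough."""
    has_owner = bool(source.owner.strip())
    is_fresh = (now - source.last_updated) <= source.max_age
    return has_owner and is_fresh


# Illustrative values only.
now = datetime(2024, 1, 10)
crm = DataSource("crm_accounts", "sales-ops", datetime(2024, 1, 9), timedelta(days=2))
sheet = DataSource("pricing_sheet", "", datetime(2023, 11, 1), timedelta(days=30))
```

Here `is_trustworthy(crm, now)` passes, while the spreadsheet fails on both counts: nobody owns it and it is months out of date.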

When AI agents move into production, we keep seeing the same patterns repeat themselves.

Very often, there isn’t actually a clear process to automate. Sales qualification works differently across regions. Support routing depends on tribal knowledge. Account information lives in spreadsheets and inboxes. AI doesn’t fix this. It simply exposes it. An agent can’t stabilize chaos; it amplifies it.

Another common issue is the lack of a human fallback. Many implementations assume the agent will handle everything. But real-world operations always come with exceptions: missing data, unusual customer requests, conflicting signals. Without a clear handoff to people, confidence disappears quickly. Sometimes a single bad experience is enough for a team to disengage entirely.
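A human fallback can start as a very small routing rule. The following is a minimal sketch, assuming the agent reports a confidence score and a list of fields it could not find. `AgentResult`, `route`, and the 0.8 threshold are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentResult:
    """Hypothetical output of an agent for a single request."""
    answer: str
    confidence: float  # assumed to be a score between 0 and 1
    missing_fields: List[str] = field(default_factory=list)


def route(result: AgentResult, min_confidence: float = 0.8) -> str:
    """Return "auto" when the agent may act alone, otherwise "human"."""
    if result.missing_fields:
        # Missing data is an exception: hand off instead of guessing.
        return "human"
    if result.confidence < min_confidence:
        # Low confidence also goes to a person.
        return "human"
    return "auto"
```

For example, `route(AgentResult("Qualified lead", 0.95))` stays automated, while the same result with `missing_fields=["account_id"]` goes to a person. The point is less the threshold itself than the fact that the handoff rule is written down and can be reviewed.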

Then there’s monitoring. AI is not a set-and-forget system. Data changes, models drift, business priorities evolve. If no one is actively watching outcomes and performance, quality degrades quietly in the background. By the time problems become visible, trust is already damaged.
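One simple way to watch outcomes is to track how often people accept the agent’s output over a rolling window and flag the workflow when that rate drops. This is a sketch under assumptions: the `OutcomeMonitor` class, the window size, and the 90% threshold are all hypothetical and would need tuning for a real workflow.

```python
from collections import deque


class OutcomeMonitor:
    """Tracks the share of accepted agent outputs over a rolling window."""

    def __init__(self, window: int = 50, min_rate: float = 0.9):
        self.outcomes = deque(maxlen=window)  # oldest results drop off automatically
        self.min_rate = min_rate

    def record(self, accepted: bool) -> None:
        """Store whether a human accepted the agent's output."""
        self.outcomes.append(accepted)

    def acceptance_rate(self) -> float:
        """Share of accepted outputs in the window (1.0 when empty)."""
        if not self.outcomes:
            return 1.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """Flag only once the window is full, to avoid noisy early alerts."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.acceptance_rate() < self.min_rate
```

Someone still has to own the alert and decide what happens when `needs_review()` returns `True`; the code only makes the quiet degradation visible.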

None of this means AI agents don’t work. In fact, there are plenty of areas where they already deliver real value: lead qualification, account research, drafting emails or proposals, routing internal requests, and handling the first pass of customer inquiries. What these have in common is simple: AI handles volume, humans handle judgment. When agents accelerate people instead of replacing them, adoption is smoother and impact lasts longer. Teams don’t feel displaced; they feel supported.

The real shift isn’t about choosing the right model or framework. It’s about moving from AI tools to AI systems.

That means deliberately designing workflows, building reliable data foundations, defining ownership and governance, creating escalation paths, and putting feedback loops in place. An AI agent on its own is just automation with better branding. Real impact happens when AI becomes part of a broader operational design, not something bolted on at the end.

The teams making real progress rarely start with grand “AI transformation” programs. They start small. One workflow. One agent. One measurable outcome. They learn, iterate, and expand. They observe where humans need to stay involved, improve data quality, and refine handoffs. Most importantly, they build trust internally first.

Because before customers trust your AI, your own teams have to.

What surprises many organizations is that models, APIs, and integrations are usually not the hardest part. The real work lives in process design, data ownership, and organizational alignment. That’s where AI either becomes a meaningful capability or quietly joins the list of unfinished initiatives.

The future isn’t just about smarter agents. It’s about building systems where humans and AI work together in predictable, transparent ways.

If you’re experimenting with AI agents in sales or operations, you’re probably already seeing this: technology is only the beginning of the story. Everything around it matters far more.

We’re actively collecting real-world patterns on what works and what doesn’t when AI meets actual business processes. If you’re navigating this space too, we’re always happy to exchange notes.